Pino Log 2024.0.1 - an Offline-First Domestic Robot

I have been working on a domestic robot/system. Its name is Pino.

Personal

Intelligent

Network with

Open-endedness

The acronym, if not omitted entirely, is also a work-in-progress.

At present, the hardware, components, body, movement, etc., are all a bit elementary, I must admit. But it is an absolute blast to learn and work on -- like the ultimate trifecta of hacking and engineering (hardware, software, and movement within reality).

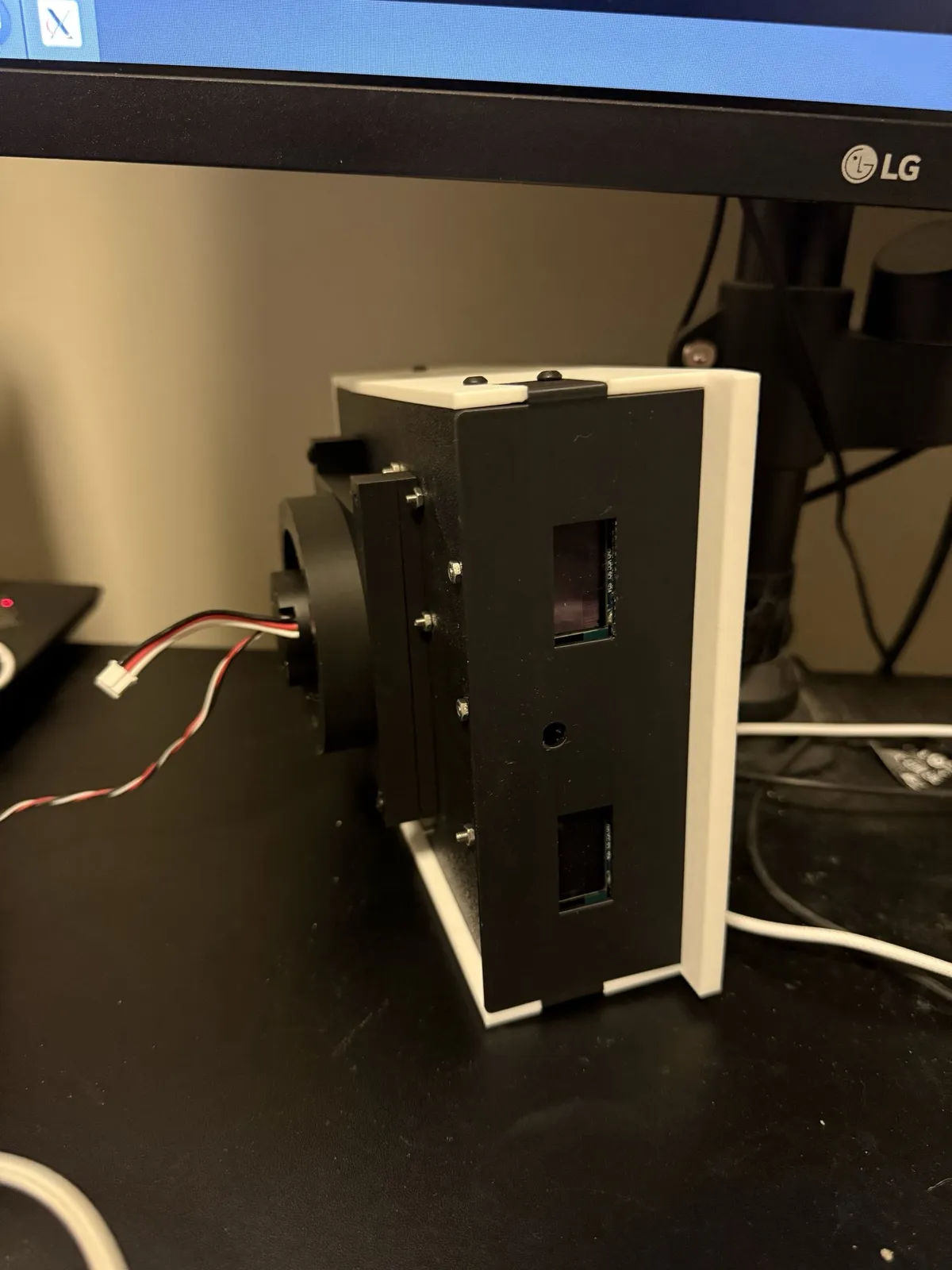

Here's a short clip of the first time I was able to power and control the eye displays with a Raspberry Pi.

When I first started the project, I envisioned everyone having a personal domestic robot. Then I realized that perhaps what everyone will have is a personal domestic local network, made up of a multi-agent/multi-model system. A robot would simply be one manifestation of that system.

Privacy and offline-first operation (a local network disconnected from the internet) are non-negotiables in Pino, which means everything should be able to run on local hardware without an internet connection. Well, that's the goal at least.

But first, I just want something to follow me around, listen to my rambles without me holding a device, and debrief me on whatever I said to it over an arbitrary time range. So I learned how to do basic CAD in Fusion 360 and printed the base body, head, and neck.
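To make the "debrief over a time range" idea concrete, here is a minimal sketch of one way it could be stored and queried. None of this is Pino's actual code: `Utterance`, `RambleLog`, `record`, and `debrief` are hypothetical names, and timestamps are assumed to be plain seconds since the Unix epoch.

```rust
use std::ops::Range;

/// One transcribed utterance (hypothetical type), stamped with
/// seconds since the Unix epoch.
#[derive(Debug, Clone, PartialEq)]
pub struct Utterance {
    pub at: u64,
    pub text: String,
}

/// A log of utterances kept sorted by timestamp (hypothetical type).
#[derive(Debug, Default)]
pub struct RambleLog {
    entries: Vec<Utterance>,
}

impl RambleLog {
    pub fn new() -> Self {
        Self::default()
    }

    /// Insert an utterance at the position that keeps the log sorted,
    /// so late-arriving transcriptions still land in the right place.
    pub fn record(&mut self, at: u64, text: &str) {
        let pos = self.entries.partition_point(|u| u.at <= at);
        self.entries.insert(pos, Utterance { at, text: text.to_string() });
    }

    /// Return every utterance with `range.start <= at < range.end`.
    /// Both bounds are found by binary search over the sorted log.
    pub fn debrief(&self, range: Range<u64>) -> &[Utterance] {
        let lo = self.entries.partition_point(|u| u.at < range.start);
        // Clamp so a reversed range yields an empty slice instead of a panic.
        let hi = self.entries.partition_point(|u| u.at < range.end).max(lo);
        &self.entries[lo..hi]
    }
}
```

Calling `log.debrief(start..end)` returns a slice of everything said in that window, which could then be handed to whatever summarizes or reads it back.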

Then I started thinking through how Pino might actually get around. So I modeled and printed some basic legs and wheels that simply didn't work; they couldn't even hold the base body or move in any direction besides forward and backward. But hey, that's prototyping and learning. ٩(^ᴗ^)۶

I opted to use gimbal motors (of various diameters) as actuators. They're just so smooth! After ordering and playing around with some gimbal motors, I started to look into motor controllers... Let's just say, I've paused the movement functionality for now.

It was around this time that I figured I was getting a little too ahead of myself. CAD and printing are super fun, but whatever manifestation you build is somewhat useless if you don't have a request processor (consider trying to drive a car without an engine).

So I pivoted to work on speech recognition (OpenAI's Whisper model), an elementary natural-language-to-request mapping (an abhorrent mess of Rust code), and text to speech (the Flite C library with Rust bindings I wrote). This was actually pretty viable running on macOS and Debian. But when I tried to run these modules on a Raspberry Pi... well, you can probably imagine how slow it was.

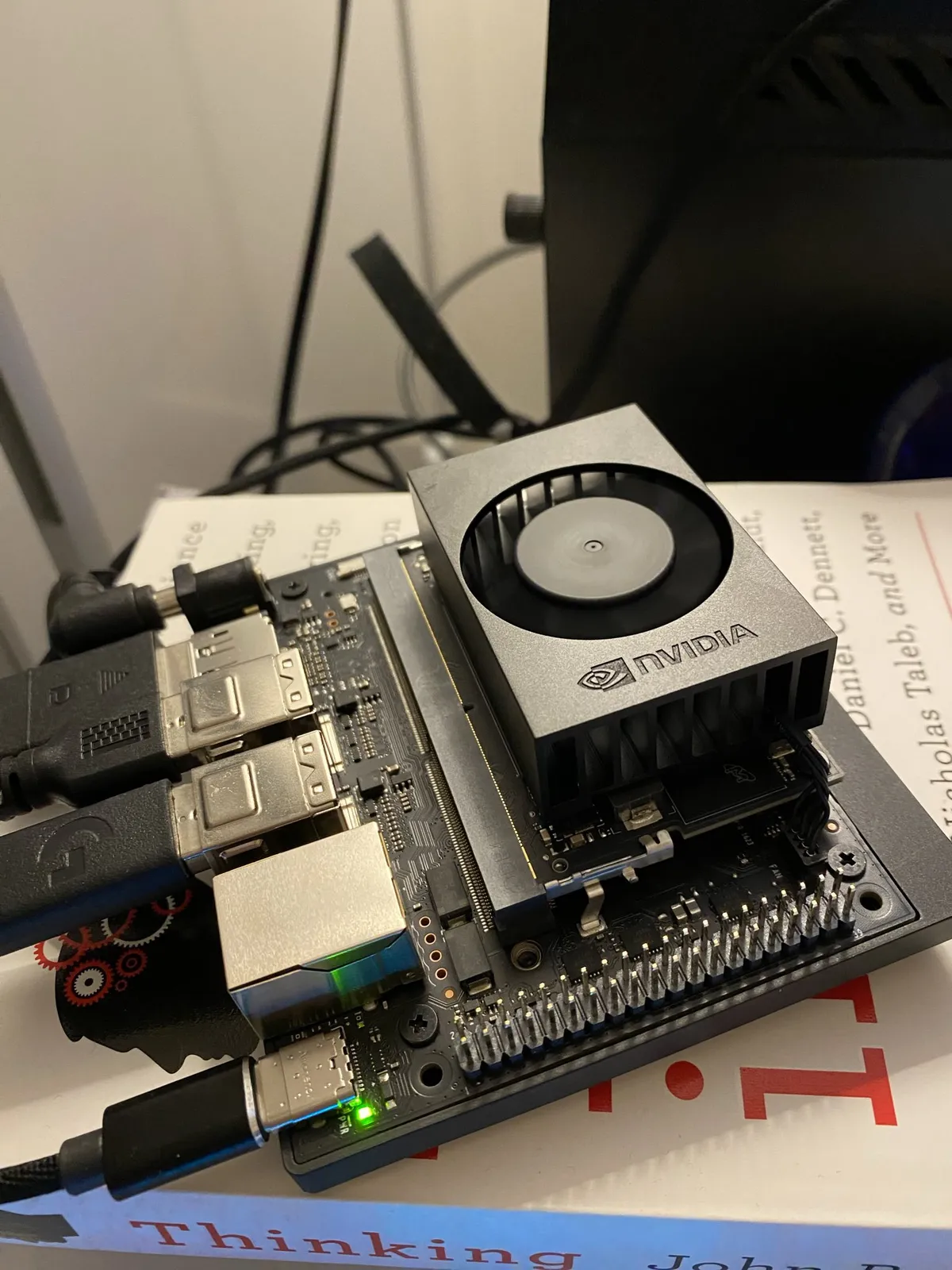

This gave me the perfect excuse to reach for an Nvidia Jetson Orin board. I wanted to eventually move to a small LLM as the NLP engine, and the Orin boards have awesome computer vision capabilities, so I figured the hardware upgrade was justified (probably cope). I also envisioned installing multiple Orin boards.

And of course, the board wouldn't boot when I received it. ಠ▄ಠ

After a few days of madness, running an Ubuntu Docker container on a Debian host (cringe), flashing, swapping out driver packages, flashing again, and other torture, I was finally able to get it to boot.

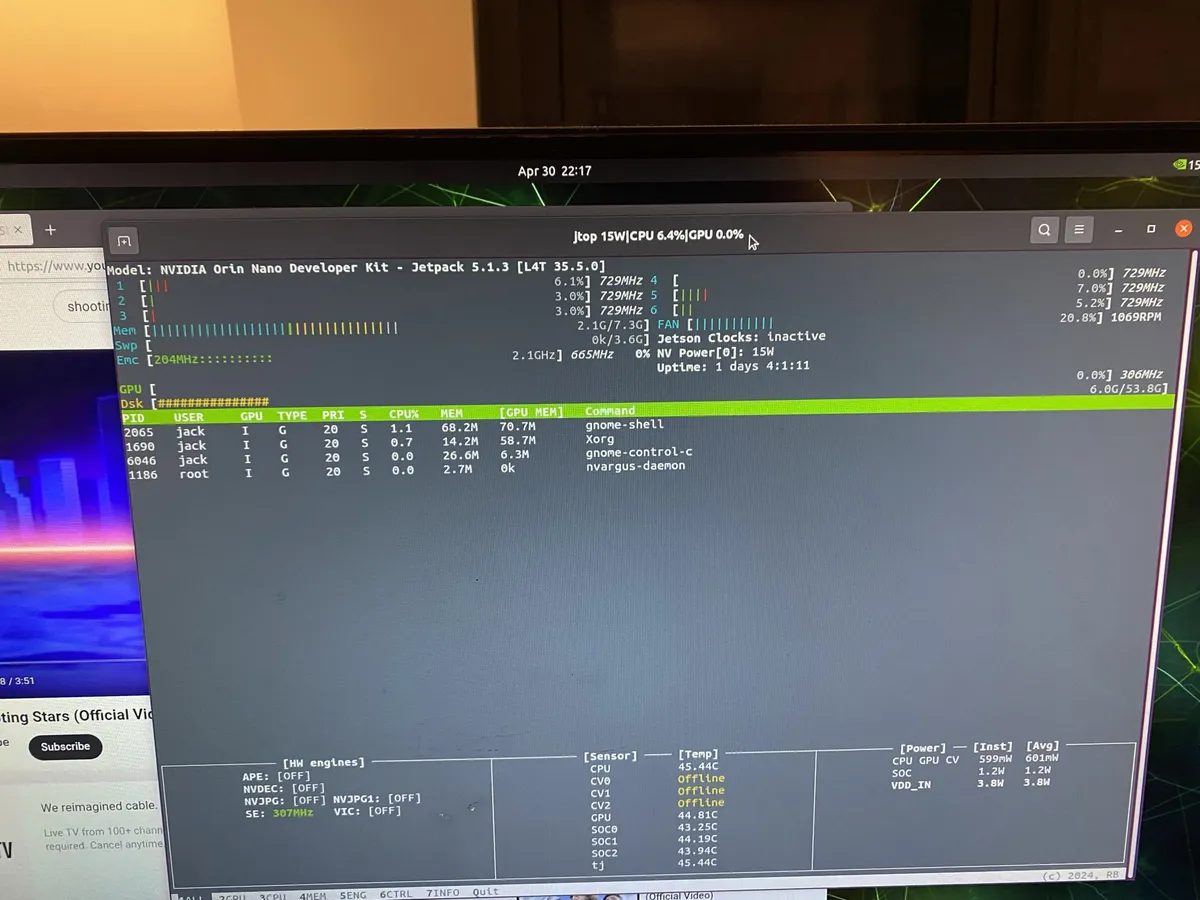

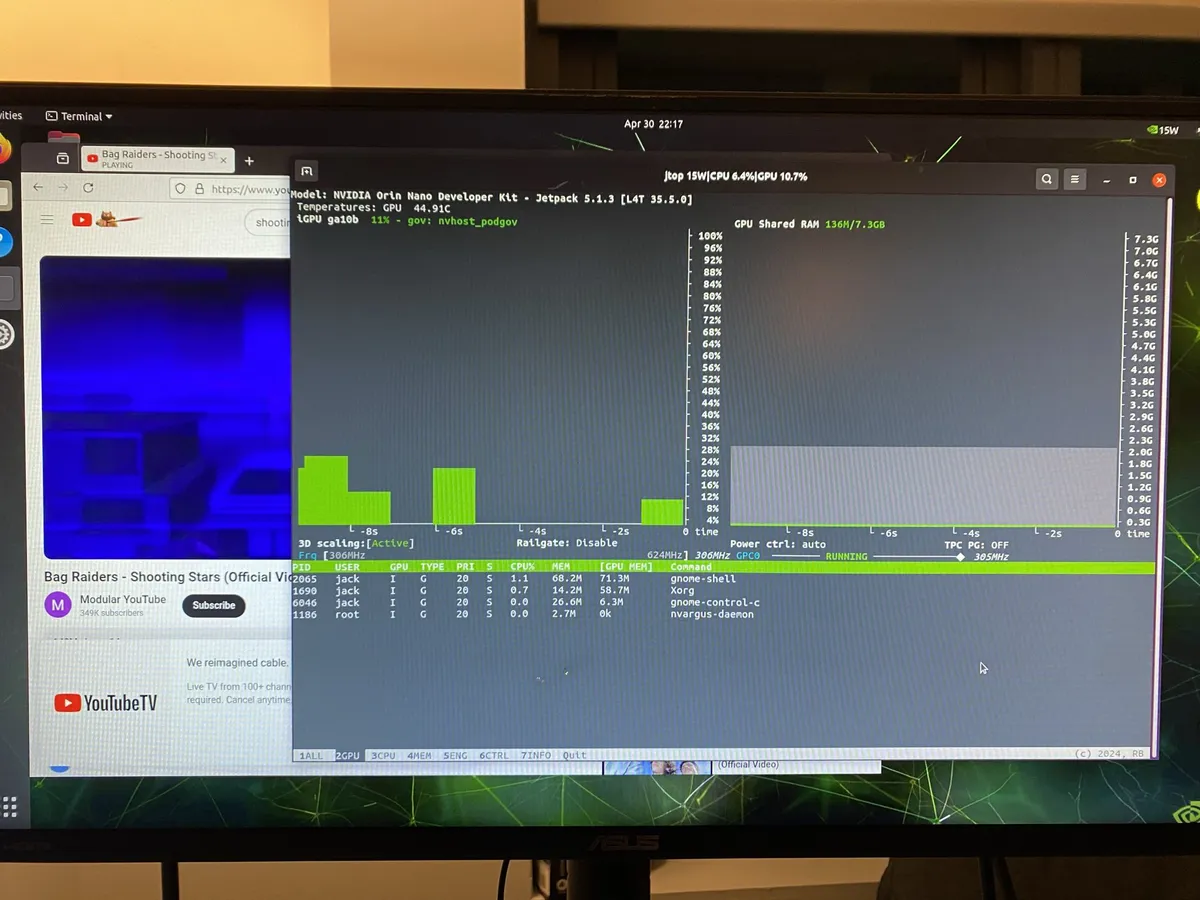

Below is jtop running on the Orin board while listening to shooting stars.

Which brings us to the present. It's been a few months since I've been able to work on Pino (work burnout), but we must push forward.

My current priority is to go end-to-end with a speech request, something you might say to Siri: "remind me...", "what's the weather this weekend?", "add 20g of protein to my daily intake", etc. Then I'll determine where to go from there.
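As a rough illustration of the middle step (turning transcribed text into a structured request), here is a keyword-matching sketch built around the three example utterances above. It is not the existing Rust mapping code: the `Request` enum and `parse_request` function are hypothetical, and a small LLM would presumably replace this kind of matching later.

```rust
/// Hypothetical request types, one per example utterance.
#[derive(Debug, PartialEq)]
pub enum Request {
    Reminder { what: String },
    Weather { when: String },
    LogProtein { grams: u32 },
    Unknown,
}

/// Map a transcribed utterance to a request using simple keyword rules.
pub fn parse_request(utterance: &str) -> Request {
    let text = utterance.trim().to_lowercase();

    // "remind me <what>"
    if let Some(rest) = text.strip_prefix("remind me") {
        return Request::Reminder { what: rest.trim().to_string() };
    }

    // "... weather <when>": keep whatever follows the keyword.
    if let Some(idx) = text.find("weather") {
        let when = text[idx + "weather".len()..]
            .trim()
            .trim_end_matches('?')
            .trim()
            .to_string();
        return Request::Weather { when };
    }

    // "... <N>g ... protein ...": take the first token shaped like "20g".
    if text.contains("protein") {
        let grams = text
            .split_whitespace()
            .filter_map(|tok| tok.strip_suffix('g'))
            .find_map(|num| num.parse::<u32>().ok());
        if let Some(grams) = grams {
            return Request::LogProtein { grams };
        }
    }

    Request::Unknown
}
```

Anything that falls through to `Request::Unknown` is a natural place to ask the speaker to repeat or rephrase.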

In the meantime, I'm planning to swap out the front face with a 7-inch HDMI monitor and program the "face" with egui. We'll see.